Fairseq is an open-source toolkit for training custom sequence-to-sequence (seq2seq) models for tasks like translation, summarization, and language modeling. Seq2seq models generate output sequences from input sequences, making them essential for AI applications involving language generation.

Key Points:

- Seq2seq Models: Consist of an encoder that converts input to a vector representation and a decoder that generates the output sequence step-by-step.

- Applications: Machine translation, text summarization, speech recognition, chatbots.

- Prerequisites: Knowledge of seq2seq models, PyTorch, deep learning concepts, Python programming.

- Setting Up Fairseq: Install Python, PyTorch, clone Fairseq repo, install dependencies, build and develop.

- Building a Model: Preprocess data, configure model, train model, evaluate performance.

- Advanced Features: Multi-GPU training, fast generation, search algorithms, mixed precision training, customization.

- Troubleshooting: Check installation, data preprocessing, model configuration, analyze performance.

Quick Comparison: Seq2seq Model Applications

| Application | Description |
|---|---|
| Machine Translation | Translate between languages (e.g., English to Spanish) |
| Text Summarization | Generate concise summaries of long documents |
| Speech Recognition | Convert spoken language to written text |
| Chatbots | Generate responses to user inputs for conversational AI |

Prerequisites for Using Fairseq

To get started with Fairseq, you'll need to have a solid understanding of certain technical concepts and programming skills. This section outlines the necessary background knowledge and technical skills required to effectively use Fairseq.

Required Technical Knowledge

Before diving into Fairseq, it's essential to have a good grasp of the following concepts and tools:

| Concept | Description |
|---|---|
| Sequence-to-sequence models | Understand how Seq2Seq models work, including encoder-decoder architectures and attention mechanisms. |
| PyTorch | Fairseq is built on PyTorch, so experience with PyTorch is crucial. |
| Deep learning | Be familiar with core deep learning architectures such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), long short-term memory (LSTM) networks, and Transformers. |

Programming Skills Needed

To implement and manipulate sequence-to-sequence models with Fairseq, you'll need to have:

- Python programming proficiency: Fairseq is a Python library, so you should be comfortable writing Python code and working with Python libraries.

- Familiarity with command-line interfaces: Fairseq provides command-line tools for preprocessing data, training models, and evaluating performance. You should be comfortable using command-line interfaces to execute these tools.

By ensuring you have the necessary technical knowledge and programming skills, you'll be well-prepared to use Fairseq effectively and achieve your sequence-to-sequence modeling goals.

Seq2Seq Model Basics

Sequence-to-sequence (Seq2Seq) models are a fundamental concept in natural language processing (NLP) and machine learning. In this section, we'll explore the basics of Seq2Seq models, including how they work and their real-world applications.

How Seq2Seq Models Work

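Seq2Seq models consist of two primary components: an encoder and a decoder. The encoder reads the input sequence and converts it into a vector representation: in classic RNN models this is a single fixed-length vector, while attention-based models such as the Transformer keep one vector per input position. The decoder then uses this representation to generate the output sequence one token at a time, conditioning each step on the tokens it has already produced.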

During training, the model's parameters are adjusted to minimize the difference between its predicted output sequence and the target output sequence, typically measured as token-level cross-entropy.
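As a concrete illustration, the usual training objective is token-level cross-entropy: with teacher forcing, the decoder predicts a probability distribution at each step, and the loss is the average negative log-probability of the correct target token. Perplexity, which Fairseq reports alongside the loss, is the exponential of that average. The sketch below computes both in plain Python from hand-written toy distributions; sequence_nll and perplexity are hypothetical helper names, not Fairseq's API. To our understanding Fairseq's logs express the loss in base 2, which is why the sketch accepts a base argument.

```python
import math
from typing import Dict, List


def sequence_nll(step_probs: List[Dict[str, float]], target: List[str],
                 base: float = math.e) -> float:
    """Average negative log-likelihood of `target` under per-step distributions.

    step_probs[t] maps each vocabulary token to the probability the decoder
    assigned it at step t, given the gold prefix (teacher forcing).
    """
    if len(step_probs) != len(target):
        raise ValueError("need exactly one distribution per target token")
    total = 0.0
    for dist, gold in zip(step_probs, target):
        # Cross-entropy against a one-hot target reduces to -log p(gold token).
        total += -math.log(dist[gold], base)
    return total / len(target)


def perplexity(avg_nll: float, base: float = math.e) -> float:
    # Perplexity is the average NLL exponentiated in the same base.
    return base ** avg_nll


# Toy decoder outputs for a two-token target "hola </s>".
steps = [
    {"hola": 0.5, "adios": 0.25, "</s>": 0.25},
    {"hola": 0.25, "adios": 0.25, "</s>": 0.5},
]
nll = sequence_nll(steps, ["hola", "</s>"], base=2)
print(nll, perplexity(nll, base=2))
```

A model that always gives the correct token probability 0.5 has a base-2 loss of 1 bit per token and a perplexity of 2, which is a handy sanity check when reading training logs.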

Real-World Seq2Seq Uses

Seq2Seq models have numerous applications across various domains and industries. Here are some examples:

| Application | Description |
|---|---|
| Machine Translation | Translate languages, such as English to Spanish or French to German. |
| Text Summarization | Summarize long articles or documents into concise summaries. |
| Speech Recognition | Convert spoken language into written text. |
| Chatbots | Generate responses to user inputs, enabling chatbots to engage in conversations. |

These examples illustrate the versatility and effectiveness of Seq2Seq models in various applications where understanding and generating sequences are essential.

Setting Up Fairseq

Setting up Fairseq is a straightforward process that requires some technical knowledge and programming skills. In this section, we will guide you through the installation process of Fairseq and the setup required to start building sequence-to-sequence models.

Installing Fairseq

To install Fairseq, you need Python and PyTorch, the deep learning library Fairseq is built on. Check the Fairseq README for the minimum versions your release requires; the current README asks for Python 3.8 or newer. You can install PyTorch with pip or conda by following the instructions on the PyTorch website, or build it from source from GitHub.

Here are the steps to install Fairseq:

| Step | Command |

|---|---|

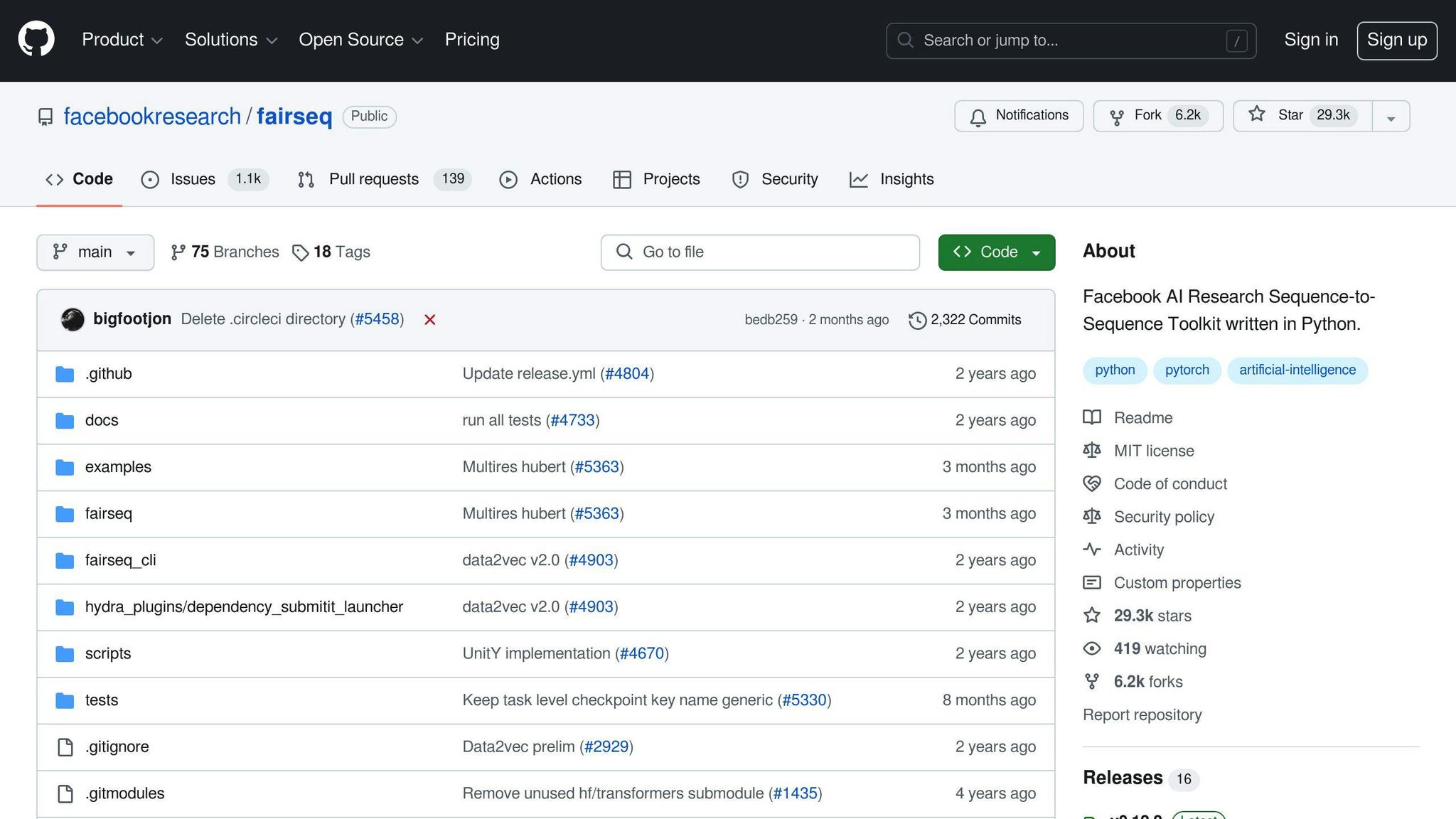

| Clone Fairseq from GitHub | git clone https://github.com/pytorch/fairseq.git |

| Change directory to Fairseq | cd fairseq |

| Install required dependencies | pip install -r requirements.txt |

| Build and develop Fairseq | python setup.py build develop |

After installing Fairseq, you can download pre-trained models and get familiar with its command-line tools, such as fairseq-preprocess, fairseq-train, and fairseq-generate.

Configuring Fairseq Environment

Before training, set up the rest of your development environment:

- Install GPU support (optional): If you plan to use GPU acceleration, install the NVIDIA driver and a CUDA-enabled build of PyTorch.

- Configure environment variables (optional): A pip installation needs no extra variables, but variables such as CUDA_VISIBLE_DEVICES let you choose which GPUs training uses.

- Set up a Python environment: Use an isolated environment (for example venv or conda) so Fairseq and its dependencies do not conflict with other projects.

- Install additional libraries: Install any extra libraries or tools your use case needs, such as SentencePiece for subword tokenization.

By following these steps, you can set up your Fairseq environment and start building sequence-to-sequence models for your NLP tasks.
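A quick way to confirm the setup is to check, from the same interpreter you will train with, that PyTorch, Fairseq, and any extras such as SentencePiece are importable. The sketch below uses only the standard library (importlib.util.find_spec and importlib.metadata, Python 3.8+); check_packages is a hypothetical helper and the package list is an assumption to adapt. Once torch imports, torch.cuda.is_available() tells you whether PyTorch can see a GPU.

```python
import importlib.util
from importlib import metadata
from typing import Dict, Iterable, Optional


def check_packages(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each module name to its version, "unknown", or None if not importable."""
    report: Dict[str, Optional[str]] = {}
    for name in names:
        if importlib.util.find_spec(name) is None:
            report[name] = None  # not installed in this interpreter
            continue
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Importable, but no installed distribution metadata under this name.
            report[name] = "unknown"
    return report


if __name__ == "__main__":
    for pkg, version in check_packages(["torch", "fairseq", "sentencepiece"]).items():
        print(f"{pkg:15s} {version or 'NOT INSTALLED'}")
```

Running this inside the environment you created, rather than from a different shell or IDE interpreter, catches the common case where Fairseq was installed into one Python and training is launched from another.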


Building a Seq2Seq Model with Fairseq

Building a sequence-to-sequence (seq2seq) model with Fairseq involves several steps, from data preprocessing to training and evaluation. In this section, we will guide you through the process of developing a basic seq2seq model using Fairseq.

Seq2Seq Model Development Steps

To develop a seq2seq model with Fairseq, follow these steps:

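1. Data Preprocessing: Tokenize your text (Fairseq works with common schemes such as Moses tokenization, byte-pair encoding, and SentencePiece), then run fairseq-preprocess to build a vocabulary and convert each sentence into a sequence of integer ids.

2. Model Configuration: Configure your seq2seq model by specifying the model architecture, hyperparameters, and training settings. Fairseq provides a range of pre-built architectures, such as transformer, lstm, and fconv, selected with the --arch option.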

3. Model Training: Train your seq2seq model using the preprocessed data and configured settings. Fairseq supports distributed training across multiple GPUs and machines.

4. Model Evaluation: Evaluate your trained model using metrics such as BLEU score, perplexity, and accuracy.
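To make "tokenizing into an integer sequence" concrete: fairseq-preprocess builds a vocabulary from the training data, writes it to dict.<lang>.txt files with one "token count" entry per line, and binarizes the text as integer ids. The toy class below mimics that idea in plain Python. ToyVocab and its methods are hypothetical, not Fairseq's Dictionary API; the reserved symbols <s>, <pad>, </s>, <unk> at ids 0 to 3 and the appended end-of-sentence id follow Fairseq's defaults to our understanding, but treat those details as an assumption.

```python
from collections import Counter
from typing import Iterable, List


class ToyVocab:
    """Hypothetical, simplified vocabulary mapping tokens to integer ids."""

    SPECIALS = ["<s>", "<pad>", "</s>", "<unk>"]  # reserved ids 0..3

    def __init__(self, sentences: Iterable[str], min_count: int = 1):
        self.counts = Counter(tok for s in sentences for tok in s.split())
        # Most frequent tokens get the smallest ids; ties are broken alphabetically.
        kept = sorted(
            (tok for tok, c in self.counts.items() if c >= min_count),
            key=lambda tok: (-self.counts[tok], tok),
        )
        self.symbols: List[str] = self.SPECIALS + kept
        self.index = {sym: i for i, sym in enumerate(self.symbols)}
        self.unk = self.index["<unk>"]
        self.eos = self.index["</s>"]

    def encode(self, sentence: str) -> List[int]:
        # Split on whitespace (text is assumed to be tokenized already),
        # map unseen tokens to <unk>, and append the end-of-sentence id.
        return [self.index.get(tok, self.unk) for tok in sentence.split()] + [self.eos]

    def dict_lines(self) -> List[str]:
        # "token count" lines for the learned (non-special) symbols.
        return [f"{tok} {self.counts[tok]}" for tok in self.symbols[len(self.SPECIALS):]]


vocab = ToyVocab(["the cat sat", "the dog sat", "the cat ran"])
print(vocab.encode("the cat flew"))  # [4, 5, 3, 2]
print(vocab.dict_lines())  # ['the 3', 'cat 2', 'sat 2', 'dog 1', 'ran 1']
```

The unseen word "flew" becomes the <unk> id, which is why vocabulary size and frequency thresholds matter: rare words that fall below the cutoff are all collapsed into one symbol.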

Practical Example

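Let's consider a practical example: a machine translation model that translates English sentences into Spanish. Fairseq's standard workflow runs through its command-line tools rather than a Python model-building API, so the example below uses fairseq-preprocess, fairseq-train, and fairseq-generate. It assumes you already have a tokenized English-Spanish parallel corpus in data/train.en, data/train.es, data/valid.en, and data/valid.es (placeholder paths):

```bash
# Build the vocabularies and binarize the tokenized parallel corpus
fairseq-preprocess --source-lang en --target-lang es \
    --trainpref data/train --validpref data/valid \
    --destdir data-bin/en_es --workers 4

# Train a Transformer with 6 encoder and 6 decoder layers, 512-dim embeddings,
# and dropout 0.1, stopping after 10,000 updates
fairseq-train data-bin/en_es \
    --arch transformer \
    --encoder-layers 6 --decoder-layers 6 \
    --encoder-embed-dim 512 --decoder-embed-dim 512 \
    --dropout 0.1 \
    --optimizer adam --adam-betas '(0.9, 0.98)' --lr 5e-4 \
    --lr-scheduler inverse_sqrt --warmup-updates 4000 \
    --criterion label_smoothed_cross_entropy --label-smoothing 0.1 \
    --max-tokens 4096 --max-update 10000 \
    --save-dir checkpoints/en_es

# Translate the validation split with beam search and print BLEU
fairseq-generate data-bin/en_es --gen-subset valid \
    --path checkpoints/en_es/checkpoint_best.pt --beam 5
```

In this example, fairseq-preprocess turns the text into binarized integer sequences, fairseq-train configures and trains a Transformer-based seq2seq model, and fairseq-generate evaluates the best checkpoint. The optimizer, learning-rate, label-smoothing, and batch-size settings are typical Transformer starting values, not choices tuned for your data.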

Key Takeaways

| Step | Description |
|---|---|
| Data Preprocessing | Tokenize input text into an integer sequence |
| Model Configuration | Specify model architecture, hyperparameters, and training settings |
| Model Training | Train the seq2seq model using preprocessed data and configured settings |
| Model Evaluation | Evaluate the trained model using metrics such as BLEU score, perplexity, and accuracy |
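fairseq-generate prints a corpus-level BLEU score at the end of its output, and sacreBLEU is the common choice for scores you intend to report. To show what that number measures, here is a minimal, unsmoothed corpus BLEU in plain Python: clipped n-gram precisions up to 4-grams, combined by a geometric mean and multiplied by a brevity penalty. It assumes pre-tokenized text and one reference per hypothesis, and its scores will not exactly match sacreBLEU, which applies its own tokenization; corpus_bleu and ngram_counts are hypothetical helpers.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    # Multiset of all n-grams (as tuples) in a token sequence.
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[List[str]], references: List[List[str]],
                max_n: int = 4) -> float:
    """Unsmoothed corpus BLEU in [0, 1], one reference per hypothesis."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            hyp_ngrams, ref_ngrams = ngram_counts(hyp, n), ngram_counts(ref, n)
            # Clipping: an n-gram is credited at most as often as it occurs in the reference.
            matches[n - 1] += sum(min(c, ref_ngrams[g]) for g, c in hyp_ngrams.items())
            totals[n - 1] += max(len(hyp) - n + 1, 0)
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # any zero precision makes the geometric mean zero
    # Geometric mean of the n-gram precisions, with uniform weights.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter than the references overall.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return bp * math.exp(log_precision)


hyp = "the cat sat on the mat".split()
ref = "the cat sat on the mat".split()
print(corpus_bleu([hyp], [ref]))  # 1.0
```

Because precisions are pooled over the whole corpus before taking the geometric mean, corpus BLEU is not the average of per-sentence BLEU scores; this is one reason to compare systems only with the same scoring tool and settings.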

By following these steps and using Fairseq, you can develop a basic seq2seq model for your NLP tasks.

Advanced Fairseq Features

Key Fairseq Features

Fairseq offers several advanced features that make it a powerful tool for sequence-to-sequence modeling. Here are some of the key features:

| Feature | Description |
|---|---|
| Multi-GPU Training | Train models on multiple GPUs, reducing training time |
| Fast Generation | Generate outputs quickly and efficiently on CPU and GPU |
| Search Algorithms | Determine the best output during generation using beam search, diverse beam search, and sampling |
| Mixed Precision Training | Train models using lower precision data types, reducing memory usage and increasing training speed |
| Extensibility | Customize and extend the framework to meet specific needs through its modular architecture |
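Beam search keeps the beam_size best partial outputs at each step instead of committing to the single most likely token; greedy decoding is the special case beam_size = 1. The sketch below is a plain-Python illustration with a hypothetical toy next-token model, not Fairseq's implementation: fairseq-generate exposes options such as --beam and --lenpen (length penalty), whereas this version applies no length normalization and simply lets the beam shrink as hypotheses finish.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# A "model" here is any function mapping a prefix to {next_token: log_prob}.
NextLogProbs = Callable[[Sequence[str]], Dict[str, float]]
Hypothesis = Tuple[List[str], float]


def beam_search(next_log_probs: NextLogProbs, beam_size: int, max_len: int,
                eos: str = "</s>") -> List[Hypothesis]:
    """Return hypotheses as (tokens, summed log-prob), best first."""
    beams: List[Hypothesis] = [([], 0.0)]
    finished: List[Hypothesis] = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates = [
            (tokens + [tok], score + lp)
            for tokens, score in beams
            for tok, lp in next_log_probs(tokens).items()
        ]
        # Keep the beam_size best; those ending in eos leave the beam.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            (finished if tokens[-1] == eos else beams).append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # unfinished hypotheses that reached max_len
    return sorted(finished, key=lambda c: c[1], reverse=True)


# Hypothetical toy model: probabilities of the next token given the prefix.
TOY_PROBS = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"</s>": 0.55, "c": 0.45},
    ("b",): {"</s>": 0.9, "c": 0.1},
}


def toy_model(prefix: Sequence[str]) -> Dict[str, float]:
    # Prefixes not in the table can only end the sentence.
    probs = TOY_PROBS.get(tuple(prefix), {"</s>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


print(beam_search(toy_model, beam_size=1, max_len=5)[0][0])  # ['a', '</s>']
print(beam_search(toy_model, beam_size=2, max_len=5)[0][0])  # ['b', '</s>']
```

Greedy decoding commits to "a" (0.6) and ends with total probability 0.33, while a beam of two also keeps "b" and finds "b </s>" with probability 0.36, showing why a wider beam can recover better outputs.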

Customizing Fairseq

Fairseq's architecture is designed to be highly customizable, allowing you to tailor the framework to fit your specific research or production needs. You can customize Fairseq by:

- Registering additional models, criteria (loss functions), tasks, optimizers, and learning rate schedulers

- Using Hydra, a configuration management tool, to easily customize and experiment with different configurations
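In Fairseq itself you register a new model with the register_model decorator from fairseq.models (plus register_model_architecture for named configurations) and point fairseq-train at your code with --user-dir. The stand-alone sketch below reproduces only the underlying registry-and-decorator pattern, so you can see how a string such as an --arch value becomes a class; MODEL_REGISTRY, build_model, and TinyLSTM are hypothetical names, not Fairseq's API.

```python
from typing import Callable, Dict, TypeVar

T = TypeVar("T", bound=type)

# Global name -> class table, filled in by the decorator at import time.
MODEL_REGISTRY: Dict[str, type] = {}


def register_model(name: str) -> Callable[[T], T]:
    """Class decorator that records a model class under `name`."""
    def wrapper(cls: T) -> T:
        if name in MODEL_REGISTRY:
            raise ValueError(f"model {name!r} is already registered")
        MODEL_REGISTRY[name] = cls
        return cls  # the class itself is returned unchanged
    return wrapper


def build_model(name: str, **kwargs):
    # Look up the class by its registered name, as a command-line string would.
    try:
        cls = MODEL_REGISTRY[name]
    except KeyError:
        raise ValueError(f"invalid model {name!r}; choose from {sorted(MODEL_REGISTRY)}") from None
    return cls(**kwargs)


@register_model("tiny_lstm")
class TinyLSTM:
    def __init__(self, hidden_size: int = 64):
        self.hidden_size = hidden_size


model = build_model("tiny_lstm", hidden_size=128)
print(type(model).__name__, model.hidden_size)  # TinyLSTM 128
```

Because registration happens when the module defining the class is imported, a custom model that is never imported is never registered; this is why Fairseq needs --user-dir to find code that lives outside the package.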

By leveraging these advanced features and customization options, you can unlock the full potential of Fairseq and develop complex sequence-to-sequence models that meet your specific needs.

Troubleshooting Fairseq

Common Fairseq Issues

When working with Fairseq, you may encounter some common issues that can hinder your progress. Here are some practical tips to help you avoid common errors and improve model performance:

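| Issue | Solution |
|---|---|
| Invalid choice of architecture | Check the spelling of the --arch value against the supported architectures listed in the Fairseq documentation (the command-line error message also lists the valid choices). Custom architectures are only available if fairseq-train can import your code, for example via --user-dir. |
| Incorrect installation | Verify that Fairseq and all required dependencies are installed in the environment you are running. Check the installation logs for any errors. |
| Data preprocessing | Make sure source and target files are aligned line by line, tokenized consistently, and binarized with fairseq-preprocess. Fairseq handles batching and padding itself during training. |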
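fairseq-preprocess pairs the n-th line of the source file with the n-th line of the target file, so one missing or extra line misaligns every sentence pair after it. A cheap pre-flight check, sketched below with only the standard library, is to confirm that both files have the same number of lines and to flag empty lines. check_parallel is a hypothetical helper, and whether empty lines cause problems in your setup is an assumption worth verifying.

```python
import os
import tempfile
from itertools import zip_longest
from typing import List, Tuple


def check_parallel(src_path: str, tgt_path: str) -> List[Tuple[int, str]]:
    """Return (line number, problem) pairs for a source/target training pair."""
    problems: List[Tuple[int, str]] = []
    with open(src_path, encoding="utf-8") as src, open(tgt_path, encoding="utf-8") as tgt:
        # zip_longest pads the shorter file with None, exposing count mismatches.
        for lineno, (s, t) in enumerate(zip_longest(src, tgt), start=1):
            if s is None or t is None:
                problems.append((lineno, "files have different line counts"))
                break
            if not s.strip() or not t.strip():
                problems.append((lineno, "empty source or target line"))
    return problems


# Demo on throwaway files: line 2 of the source is empty, and the target is one line short.
with tempfile.TemporaryDirectory() as d:
    src, tgt = os.path.join(d, "train.en"), os.path.join(d, "train.es")
    with open(src, "w", encoding="utf-8") as f:
        f.write("hello world\n\ngood morning\n")
    with open(tgt, "w", encoding="utf-8") as f:
        f.write("hola mundo\nbuenos dias\n")
    print(check_parallel(src, tgt))
    # [(2, 'empty source or target line'), (3, 'files have different line counts')]
```

On real data you would call it as check_parallel("data/train.en", "data/train.es") (placeholder paths) for each split before running fairseq-preprocess.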

Advanced Troubleshooting

For more complex issues, here are some advanced troubleshooting strategies:

1. Debugging fairseq-train: Run the fairseq-train script under a debugger or IDE and step through it to identify the source of the error.

2. Checking model configuration: Verify that your model configuration is correct, including hyperparameters, optimizer settings, and loss functions.

3. Analyzing model performance: Track validation loss and perplexity during training, plus task metrics such as BLEU for translation (or accuracy and F1 score where they apply), to analyze your model's performance and identify areas for improvement.

By following these troubleshooting tips, you can overcome common obstacles and develop high-performing sequence-to-sequence models with Fairseq.

Conclusion and Next Steps

Key Takeaways

In this comprehensive guide, we've covered the essential concepts and techniques for getting started with sequence-to-sequence models using Fairseq. We've explored the basics of Fairseq, its features, and capabilities, as well as how to set up and configure the environment for sequence-to-sequence modeling. We've also delved into building a seq2seq model with Fairseq, troubleshooting common issues, and advanced features for customizing and optimizing model performance.

Continuing Your Fairseq Journey

To further develop your skills in sequence-to-sequence modeling with Fairseq, consider the following steps:

| Step | Description |
|---|---|
| Explore Fairseq Documentation | Dive deeper into the official Fairseq documentation to learn more about its features, APIs, and best practices. |
| Join the Fairseq Community | Connect with other developers, researchers, and practitioners in the Fairseq community forum to stay updated with the latest developments and advancements. |
| Tutorials and Guides | Explore additional tutorials and guides on sequence-to-sequence modeling with Fairseq, covering topics such as language translation, text summarization, and chatbots. |
| Collaborative Projects | Participate in collaborative projects and open-source initiatives that utilize Fairseq, such as machine translation, language modeling, and text generation. |

By continuing to learn and explore the capabilities of Fairseq, you'll be well-equipped to tackle complex sequence-to-sequence modeling tasks and stay at the forefront of AI innovation.

FAQs

What does Fairseq preprocess do?

fairseq-preprocess is the command-line tool that prepares data for model training by converting it into a format Fairseq can work with. It builds a dictionary (vocabulary) for each language, saved as dict.<lang>.txt with one token and its frequency per line, and binarizes the training, validation, and test files into integer ids that Fairseq loads during training.

How to get a specific module out of Fairseq?

To access a specific Fairseq module, import it by name from the fairseq.modules package. For example, to get the AdaptiveInput module, use from fairseq.modules import AdaptiveInput, or refer to fairseq.modules.AdaptiveInput after import fairseq.modules.
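If the module name is only known at runtime (for example, read from a config string), the standard importlib.import_module plus getattr performs the same lookup. The helper below is a hypothetical convenience, shown with a standard-library module so it runs without Fairseq installed; with Fairseq you would call it as get_component("fairseq.modules", "AdaptiveInput").

```python
import importlib
from typing import Any


def get_component(module_path: str, name: str) -> Any:
    """Import `module_path` and return its attribute `name` (class, function, ...)."""
    module = importlib.import_module(module_path)
    try:
        return getattr(module, name)
    except AttributeError:
        # Re-raise with a message that names both the module and the attribute.
        raise AttributeError(f"{module_path} has no attribute {name!r}") from None


cls = get_component("collections", "OrderedDict")
print(cls.__name__)  # OrderedDict
```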

Why are dictionaries required in Fairseq?

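Dictionaries are essential in Fairseq because models operate on integer ids, not raw text. A Fairseq dictionary maps each token in the vocabulary to a unique integer index, including special symbols for padding, end-of-sentence, and unknown words, so text can be converted into model inputs and model outputs can be converted back into text.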

How to use Fairseq in inference mode?

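To run a trained model for inference, use fairseq-generate to translate a binarized test set, or fairseq-interactive to translate raw text typed or piped into standard input; both take the trained checkpoint through the --path option. If you encounter errors, check your installation and module imports.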

How to troubleshoot common Fairseq issues?

To troubleshoot common Fairseq issues, check your installation, module imports, and configuration. Ensure you have the necessary dependencies installed and your environment is correctly set up. If you encounter errors, refer to the official Fairseq documentation and community forums for support.